Unlock the power of the patient's voice with speech analytics

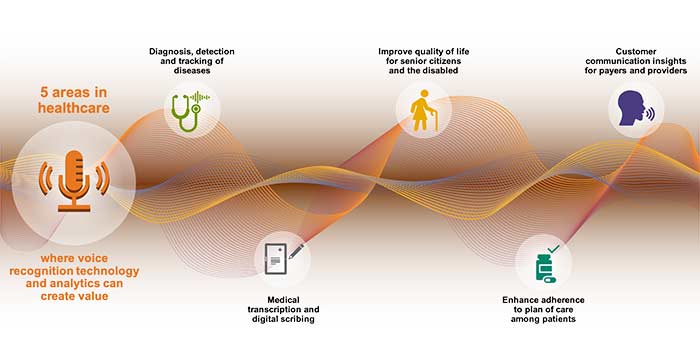

Five ways voice recognition technology and speech analytics can help health care work better for everyone.

Recent advances in deep neural networks (DNNs), a form of machine learning (ML), have made artificial intelligence (AI) a household term. One of the goals of AI is to recognize and understand human speech across all languages. To this end, DNN-based models built by the large internet technology companies continue to break records on well-known speech recognition benchmarks, such as the LibriSpeech Automatic Speech Recognition benchmark.

This has led to an explosion of activity in building voice-based applications and speech analytics software, with CB Insights estimating the market size for voice recognition to hit $49 billion in a couple of years, and VC funding tripling in a year from $0.5 billion in 2018 to around $1.5 billion in 2019.

In this article, we look at five areas in health care where voice recognition technology and voice analytics can unlock value to help people live healthier lives and help make the health system work better for everyone.

Voice technology to identify diseases

An emerging speech analytics use case is the use of vocal biomarkers to detect and diagnose diseases early. A patient’s voice, speech patterns and breathing rhythm hold clues to underlying conditions. For example, research is underway on early detection of Alzheimer’s disease and dementia from a patient’s speech patterns.

Another example is lung auscultation, the process of listening to lung sounds, which can be performed using a digital stethoscope. The solution comprises: (a) an edge computing device, such as an Arduino; (b) a convolutional recurrent neural network trained to recognize various lung sounds, such as rhonchi, wheezes, and coarse and fine crackles; and (c) a framework for deploying deep learning models on the edge, such as TensorFlow.js.

In 2018, it was also reported that a patent was filed for recognizing, through a smart speaker, whether a user has a cold or flu based on subtle changes in voice. The use of vocal biomarkers and voice technology for diagnosis is therefore poised for growth: research reports expect a CAGR of close to 15%, with the overall market reaching $2.5 billion in the next 2-3 years.

Medical transcription and digital scribing

According to some surveys, more than 50% of physicians in the US report burnout, with a major contributor being the time spent documenting patient visits in electronic health record (EHR) systems. Voice analytics and dictation software tailored for health care and medical applications has made rapid strides in this space, not just reducing physician burnout but also lowering overall costs by eliminating the need for human assistants to take notes.

Automatic speech recognition (ASR) software today usually comprises three components: (a) a lexicon, which is a list with two entries in each row: a word and its pronunciation; (b) an acoustic model, which takes a small frame of audio and predicts which phoneme was spoken; and (c) a language model that predicts the next word in a sentence, trained specifically for medical scenarios and vocabulary.
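To make the division of labor among these three components concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how any production ASR system is built: the phoneme spellings, the three-sentence corpus and the `decode` function are all hypothetical, and the acoustic model is replaced by a stand-in that assumes the audio has already been turned into one phoneme string per word. The example uses "ileum" and "ilium", which sound alike, to show why a language model trained on medical text matters.

```python
from collections import Counter, defaultdict

# (a) Lexicon: each row pairs a word with its pronunciation.
# The phoneme spellings are illustrative, not taken from a real dictionary.
LEXICON = {
    "the": "DH AH",
    "distal": "D IH S T AH L",
    "fractured": "F R AE K CH ER D",
    "ileum": "IH L IY AH M",   # part of the small intestine
    "ilium": "IH L IY AH M",   # pelvic bone, pronounced the same
}

# Invert the lexicon: one pronunciation can map to several candidate words.
WORDS_BY_PRONUNCIATION = defaultdict(list)
for word, phones in LEXICON.items():
    WORDS_BY_PRONUNCIATION[phones].append(word)

# (c) Language model: bigram counts from a tiny, made-up medical corpus.
CORPUS = [
    "the distal ileum",
    "the distal ileum",
    "the fractured ilium",
]
BIGRAMS = Counter()
for sentence in CORPUS:
    tokens = ["<s>"] + sentence.split()
    BIGRAMS.update(zip(tokens, tokens[1:]))


def decode(pronunciations):
    """Greedy decoding over per-word phoneme strings.

    (b) Stand-in for the acoustic model: we assume it has already produced
    one phoneme string per spoken word. For each string, pick the candidate
    word that most often followed the previous word in the corpus.
    """
    previous, output = "<s>", []
    for phones in pronunciations:
        candidates = WORDS_BY_PRONUNCIATION.get(phones, ["<unk>"])
        best = max(candidates, key=lambda w: BIGRAMS[(previous, w)])
        output.append(best)
        previous = best
    return output


if __name__ == "__main__":
    print(decode(["DH AH", "D IH S T AH L", "IH L IY AH M"]))
    print(decode(["DH AH", "F R AE K CH ER D", "IH L IY AH M"]))
```

The same sound sequence resolves to a different word depending on context, which is the job the medical language model performs in a real system, typically with a far larger model and a proper search rather than a greedy pick.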

Last year, OrthoAtlanta, a 14-office group practice with 37 physicians, reported saving an hour a day per physician using AI-powered dictation software, with the average time taken to complete a note reportedly dropping from 4.8 minutes to 1.6 minutes.

Improving patients' quality of life

Elderly patients are often confined to their homes, unable to use technologies that require good vision and nimble fingers. Voice technology, through smart speakers and AI-based voice assistants, can help these senior citizens with scheduling and keeping appointments, staying connected with family members and other daily tasks.

Similarly, the quality of life of patients with speech or hearing difficulties can be improved by converting speech to text or vice versa. For example, roughly a quarter of patients with amyotrophic lateral sclerosis (ALS) suffer from slurred speech. Voice technology solutions help these patients by converting non-standard speech to standard speech, helping summon caregivers, creating live subtitles during a group conversation that show who says what, and so on.

ASR solutions in this space must overcome two challenges. First, speech patterns vary even among patients with the same type of atypical speech. Second, it is harder to collect a sufficient amount of non-standard speech training data from an individual patient than to gather standard speech from volunteers.

Help improve adherence among patients

Around 50% of patients with chronic conditions, such as diabetes, hypertension or asthma, find it difficult to adhere to their plan of care. To address this, quite a few startups have built applications that routinely ask questions about a patient’s condition and offer reminders, or AI assistants that provide clinically approved advice and recommendations at home.

The solution approach here involves: (a) analytics to identify members who are unlikely to adhere to their plan of care, based on historical treatment and pill reordering data; (b) designing the right interventions and identifying the right time to deliver them; and (c) engineering the technology ecosystem, centered around smart speakers and mobile apps, to deliver the interventions.
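As an illustration of step (a), the sketch below flags members from pill reordering data using the proportion of days covered (PDC), a commonly used adherence measure. This is one possible approach under stated assumptions, not the method of any particular vendor: the function names, the input format and the 0.8 threshold are assumptions chosen for the example, and this simple version does not carry leftover supply forward when a member refills early.

```python
from datetime import date, timedelta


def proportion_of_days_covered(fills, window_start, window_end):
    """Fraction of days in [window_start, window_end] with medication on hand.

    fills: list of (fill_date, days_supply) tuples (hypothetical input format).
    Overlapping supply from early refills is counted once, not shifted forward.
    """
    covered = set()
    for fill_date, days_supply in fills:
        for offset in range(days_supply):
            day = fill_date + timedelta(days=offset)
            if window_start <= day <= window_end:
                covered.add(day)
    total_days = (window_end - window_start).days + 1
    return len(covered) / total_days


def likely_non_adherent(fills, window_start, window_end, threshold=0.8):
    """Flag a member whose PDC falls below the (assumed) threshold."""
    return proportion_of_days_covered(fills, window_start, window_end) < threshold


if __name__ == "__main__":
    start, end = date(2024, 1, 1), date(2024, 3, 31)
    # Two 30-day fills with a gap between them in a 91-day window.
    gappy = [(date(2024, 1, 1), 30), (date(2024, 2, 15), 30)]
    print(round(proportion_of_days_covered(gappy, start, end), 2))
    print(likely_non_adherent(gappy, start, end))
```

A flag like this would only be the input to steps (b) and (c): deciding which intervention to deliver, when, and through which smart speaker or mobile app.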

Voice analytics for customer satisfaction

Large health care organizations use some form of customer satisfaction metric, such as Net Promoter Score (NPS), which is assessed through a post-call survey when digital penetration is low. The feedback from such surveys helps an organization design a program for improving customer satisfaction.

Voice analytics provides inputs to such programs by: (a) making highly accurate call transcripts available through appropriate speech-to-text technologies from established commercial vendors, cloud service providers or home-grown transcription solutions; and (b) applying text mining (keyword spotting, etc.) and natural language processing (NLP) methods (sentiment analysis, entity extraction, summarization, etc.) to provide intelligence and insights.
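Step (b) can be sketched in a few lines of Python once a transcript is available. The example below shows keyword spotting and a naive word-list sentiment score. The word lists and function names are hypothetical, and a real program would more likely use a trained sentiment model than a hand-written lexicon.

```python
import re
from collections import Counter

# Hypothetical word lists, chosen only for illustration.
KEYWORDS = {"claim", "refund", "copay", "prescription"}
POSITIVE = {"thanks", "great", "helpful", "resolved"}
NEGATIVE = {"frustrated", "waiting", "denied", "confusing"}


def tokenize(text):
    """Lowercase the transcript and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def spot_keywords(transcript):
    """Return how often each tracked keyword appears in the transcript."""
    counts = Counter(tokenize(transcript))
    return {word: counts[word] for word in KEYWORDS if counts[word]}


def sentiment_score(transcript):
    """Positive-word count minus negative-word count (a crude proxy)."""
    tokens = tokenize(transcript)
    positives = sum(token in POSITIVE for token in tokens)
    negatives = sum(token in NEGATIVE for token in tokens)
    return positives - negatives


if __name__ == "__main__":
    call = ("I am frustrated. My claim was denied and "
            "I have been waiting on the claim refund.")
    print(spot_keywords(call))
    print(sentiment_score(call))
```

Aggregated over many calls, counts and scores of this kind can point a customer satisfaction program toward the topics that drive negative feedback.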

Additionally, voice analytics helps health care organizations with large call centers run quality audits that examine an agent's behavior during a call: for example, whether the agent greeted the caller appropriately, whether the agent was courteous throughout the call, and whether the standard steps required by compliance norms were carried out.

Quite a few of these steps can be automated by applying NLP techniques on top of a call transcript. Such auto-audit programs can bring about significant savings in terms of operational cost.
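A rule-based version of such an auto-audit might look like the following sketch. It assumes the transcript has already been split into speaker-labeled turns, and the phrase lists, the check names and the `audit_call` function are hypothetical. Real audits would need phrasing that matches the organization's own scripts and compliance norms, and likely more robust NLP than substring matching.

```python
# Hypothetical phrase lists; a real audit would use the organization's scripts.
GREETINGS = ("thank you for calling", "good morning", "good afternoon")
VERIFICATION_PHRASES = ("date of birth", "member id")
CLOSINGS = ("anything else", "have a nice day")


def mentions(phrases, lines):
    """True if any phrase appears in any of the given lines."""
    return any(phrase in line for line in lines for phrase in phrases)


def audit_call(turns):
    """Run simple audit checks on a call.

    turns: list of (speaker, text) tuples, with speaker "agent" or "caller".
    Returns a dict mapping each check name to True or False.
    """
    agent_lines = [text.lower() for speaker, text in turns if speaker == "agent"]
    first = agent_lines[:1]   # the agent's opening line, if any
    last = agent_lines[-1:]   # the agent's closing line, if any
    return {
        "greeted_caller": mentions(GREETINGS, first),
        "verified_identity": mentions(VERIFICATION_PHRASES, agent_lines),
        "closed_politely": mentions(CLOSINGS, last),
    }


if __name__ == "__main__":
    call = [
        ("agent", "Thank you for calling, how can I help?"),
        ("caller", "I have a question about my bill."),
        ("agent", "Can you confirm your date of birth?"),
        ("caller", "Sure."),
        ("agent", "Is there anything else I can help with?"),
    ]
    print(audit_call(call))
```

Calls that fail a check can then be routed to a human auditor, so reviewers spend their time on exceptions rather than listening to every call.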

Adoption and usage of voice technology

As with all things related to voice recognition, privacy concerns will continue to dominate and determine eventual adoption and usage, especially in the patient space. Additionally, one of the key challenges organizations will face is the dismantling of existing technology systems and workflows to integrate voice recognition applications.

However, given the explosive growth in smart speakers, advances in natural language processing research, and the kinds of problems that voice technology and speech analytics can solve, exciting opportunities abound not just for organizations but also for talented technologists, data scientists and research engineers, enabling them to do their life’s best work.